What is quantum computing?

May 30, 2025

Imagine trying to design a life-saving drug by predicting exactly how complex molecules will interact, or optimizing global shipping routes with millions of variables in real time. Classical computers, powerful as they are, struggle with problems whose complexity grows exponentially with scale. Quantum computing promises a different approach. Instead of checking candidate solutions one by one, it manipulates qubits (quantum bits that can exist in a combination of states at once) so that wrong answers cancel out and right ones are reinforced. For certain problems, this fundamentally new paradigm could tackle challenges that are intractable for today's computers.

Quantum computing isn't just a faster version of the computers we use daily; it's a completely different paradigm that leverages the often counter-intuitive yet powerful laws of quantum mechanics to solve problems currently too complex for classical systems. With a surge in investment, rapid technological advancements, and the tantalizing promise of breakthroughs in science, medicine, artificial intelligence, and finance, understanding what quantum computing is and how it works is more crucial than ever. In this article, we'll unravel the mysteries of quantum computing, exploring its fundamental principles, potential applications, the key players driving innovation, and the challenges that lie ahead on the journey into this new era of computation.

Beyond bits and bytes: understanding the quantum realm

To appreciate the power of quantum computing, we first need to understand the limitations of classical computers. Classical systems, from your smartphone to the most powerful supercomputers, operate on "bits." A bit is the fundamental unit of information and can represent either a 0 or a 1 – a definite, binary state, like a light switch that's either off or on. This binary system has served us incredibly well, powering the digital revolution.

However, for certain types of problems, the sheer number of possibilities classical computers need to check grows astronomically, making them impractical. Think of trying to find the optimal configuration for a complex molecule; the number of states to simulate can be enormous. This is where the quantum realm offers a new path forward. Instead of bits, quantum computers use qubits. A qubit, the heart of a quantum computer, can be a 0, a 1, or, crucially, a combination of both simultaneously thanks to a principle called superposition. Imagine a spinning coin: while it's in the air, it's neither heads nor tails but a blend of both possibilities until it lands. A classical bit is like a coin lying flat, definitively heads or tails. Because n qubits can hold a superposition over all 2ⁿ possible bit strings, a quantum computer works within an exponentially large space of possibilities. The catch is that measuring the qubits returns only one of those bit strings, so useful algorithms must also rely on the other quantum effects described next.
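To make the exponential growth concrete, here is a minimal pure-Python sketch (an illustration, not a real simulator) that builds the state of n qubits after each one has been put into an equal superposition, and counts how many amplitudes a classical computer would have to store. The 16-bytes-per-amplitude figure is an assumption based on double-precision complex numbers.

```python
import math

BYTES_PER_AMPLITUDE = 16  # assumption: one complex128 value (two 64-bit floats)

def uniform_superposition(n_qubits):
    """State of n qubits after a Hadamard on each: 2**n equal amplitudes."""
    dim = 2 ** n_qubits
    amp = 1 / math.sqrt(dim)
    return [complex(amp, 0.0) for _ in range(dim)]

def state_memory_bytes(n_qubits):
    """Memory a classical state-vector simulator needs for n qubits."""
    return BYTES_PER_AMPLITUDE * 2 ** n_qubits

for n in (1, 2, 8, 16):
    state = uniform_superposition(n)
    total = sum(abs(a) ** 2 for a in state)  # probabilities must sum to 1
    print(f"{n:2d} qubits: {len(state):6d} amplitudes, total probability {total:.6f}")

print(f"50 qubits would need {state_memory_bytes(50):,} bytes")
```

The last line reports 18,014,398,509,481,984 bytes, roughly 18 petabytes, which is why exact simulation of around 50 qubits strains even supercomputers. Measuring those qubits, however, yields a single n-bit string, not the full list of amplitudes.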

The power lies in three key quantum phenomena that give quantum computers their extraordinary capabilities. We have already met superposition, which allows qubits to exist in multiple states simultaneously. Then there's entanglement, a phenomenon Albert Einstein famously (and somewhat skeptically) called "spooky action at a distance." When two or more qubits are entangled, their fates become intertwined: measuring one of them yields outcomes that are correlated with measurements of the other(s), regardless of the physical distance separating them, although these correlations cannot be used to send information faster than light. Finally, quantum interference allows quantum algorithms to amplify the probability of "correct" answers while canceling out "incorrect" ones, much like noise-canceling headphones use destructive interference to eliminate unwanted sound.
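Interference can be seen in the smallest possible example. The sketch below, plain Python with a hand-written 2×2 matrix (an illustration, not a library API), applies a Hadamard gate, a standard single-qubit operation, to the state |0⟩ twice. After one application the qubit is in an equal superposition; after the second, the two contributions to |1⟩ have opposite signs and cancel, so the qubit returns to |0⟩ with certainty.

```python
import math

S = 1 / math.sqrt(2)
HADAMARD = [[S, S],
            [S, -S]]

def apply_gate(gate, state):
    """Multiply a 2x2 gate matrix by a single-qubit state [amp0, amp1]."""
    return [gate[0][0] * state[0] + gate[0][1] * state[1],
            gate[1][0] * state[0] + gate[1][1] * state[1]]

def probabilities(state):
    """Measurement probabilities: squared magnitudes of the amplitudes."""
    return [abs(a) ** 2 for a in state]

zero = [1.0, 0.0]
once = apply_gate(HADAMARD, zero)   # [S, S]: equal superposition
twice = apply_gate(HADAMARD, once)  # amplitude of |1> is S*S - S*S = 0: destructive interference

print("after one H:", probabilities(once))   # about [0.5, 0.5]
print("after two H:", probabilities(twice))  # about [1.0, 0.0]
```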

How quantum computers actually work (the mechanics)

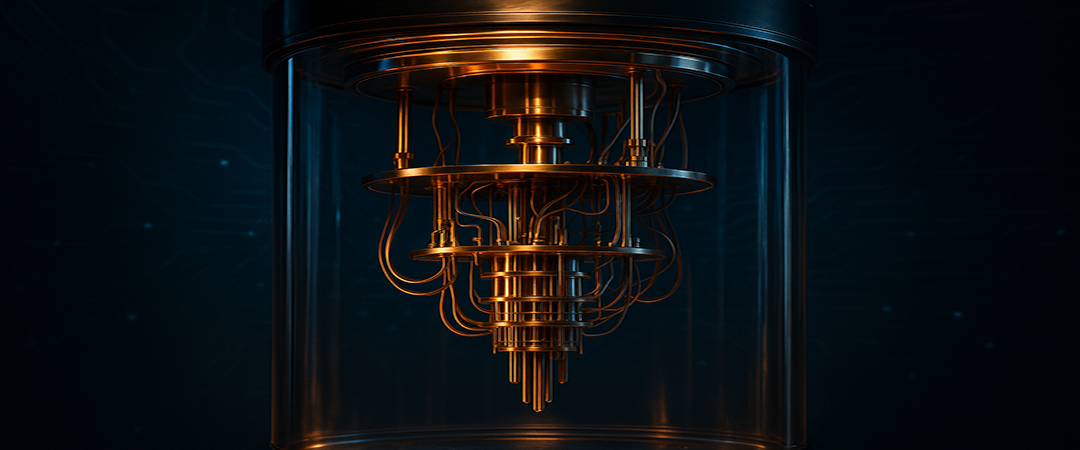

At a high level, building and operating a quantum computer involves harnessing these quantum phenomena. The qubits themselves can be realized in various physical forms, or "modalities." Prominent approaches include superconducting loops (favored by IBM Quantum and Google Quantum AI), trapped ions held in place by electromagnetic fields (used by IonQ and Quantinuum), photons (PsiQuantum, Xanadu), neutral atoms (Pasqal, QuEra), or the still-experimental topological qubits that Microsoft is developing. Many of these systems require extreme conditions to operate, such as temperatures near absolute zero and high vacuum, to protect the delicate quantum states of the qubits from environmental disturbances.

To perform computations, quantum computers use quantum gates, operations that manipulate the state of one or more qubits. They are analogous to classical logic gates (like AND, OR, NOT), but unlike AND and OR they are always reversible, and they can perform transformations with no classical counterpart, such as putting a qubit into superposition (e.g., with a Hadamard gate) or creating entanglement between qubits (e.g., with a CNOT gate). These gates are the building blocks of quantum algorithms: specialized sets of instructions designed to exploit superposition, entanglement, and interference. Famous examples include Shor's algorithm for factoring large numbers, which has implications for cryptography, and Grover's algorithm for searching unsorted databases, which offers a quadratic speedup over classical search. It's important to note that quantum computers don't speed up all tasks; they offer potentially significant advantages only for specific problem types.
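The Hadamard-then-CNOT pattern is the standard way to create an entangled "Bell state." Below is a minimal state-vector sketch in plain Python. It assumes the basis ordering |00⟩, |01⟩, |10⟩, |11⟩ with the first qubit as the CNOT control; this is an illustrative convention, and libraries such as Qiskit order qubits differently.

```python
import math

S = 1 / math.sqrt(2)

def hadamard_on_first(state):
    """Apply H to the first qubit of a 2-qubit state [a00, a01, a10, a11]."""
    a00, a01, a10, a11 = state
    return [S * (a00 + a10), S * (a01 + a11), S * (a00 - a10), S * (a01 - a11)]

def cnot(state):
    """CNOT with the first qubit as control: flips the second qubit when the first is 1."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]  # swap the |10> and |11> amplitudes

start = [1.0, 0.0, 0.0, 0.0]            # both qubits in |0>
bell = cnot(hadamard_on_first(start))   # (|00> + |11>) / sqrt(2)
for label, amp in zip(["00", "01", "10", "11"], bell):
    print(label, round(abs(amp) ** 2, 3))   # 00 and 11: 0.5 each; 01 and 10: 0.0

print(cnot(cnot(bell)) == bell)  # True: applying CNOT twice undoes it
```

Only 00 and 11 have nonzero probability, so measuring either qubit fixes the outcome of the other. The final check illustrates reversibility: CNOT is its own inverse.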

The final step in a quantum computation is "measurement." When we measure a qubit, its superposition collapses and it settles into a definite classical state (a 0 or a 1), with probabilities determined by the qubit's amplitudes. Because each outcome is random, an algorithm is typically run many times (each run is called a "shot") to determine the most likely answer. The power of quantum computation is also incredibly fragile. Qubits are extremely sensitive to their environment: any noise, vibration, or temperature fluctuation can cause them to lose their quantum properties, a phenomenon known as decoherence. This makes building stable, large-scale quantum computers a monumental engineering challenge and underscores the critical need for quantum error correction (QEC). QEC aims to detect and correct errors without disturbing the underlying quantum computation, a task far more complex than classical error correction because quantum states cannot simply be copied, nor read out mid-computation without collapsing them.
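Repeated runs are easy to mimic classically. The sketch below samples measurement outcomes from a state vector with probabilities |amplitude|² (the Born rule), using Python's `random` module as a stand-in for real hardware. The Bell-state amplitudes, the seed, and the count of 1,000 shots are illustrative choices.

```python
import math
import random
from collections import Counter

def measure(state, shots, rng):
    """Sample `shots` outcomes; outcome i occurs with probability |state[i]|**2."""
    n_qubits = len(state).bit_length() - 1          # assumes len(state) == 2**n_qubits
    labels = [format(i, f"0{n_qubits}b") for i in range(len(state))]
    weights = [abs(a) ** 2 for a in state]
    return Counter(rng.choices(labels, weights=weights, k=shots))

s = 1 / math.sqrt(2)
bell_state = [s, 0.0, 0.0, s]                       # (|00> + |11>) / sqrt(2)
counts = measure(bell_state, shots=1000, rng=random.Random(42))
print(counts)  # roughly 500 each of '00' and '11'; never '01' or '10'
```

Any single shot gives 00 or 11 at random; only the statistics over many shots reveal the underlying probabilities.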

The quantum computing stack consists of multiple layers working in harmony. At the hardware layer, qubits are housed in specialized environments like dilution refrigerators. The control system layer translates quantum algorithms into precisely timed microwave or laser pulses that manipulate the qubits. The software layer includes quantum programming languages and compilers that optimize quantum circuits. Finally, the application layer provides interfaces for solving real-world problems. Cloud services have made this technology accessible to researchers and developers worldwide: IBM offers access to its own processors through IBM Quantum, Amazon Braket and Microsoft Azure Quantum provide access to hardware from several vendors, and Google maintains Cirq, an open-source framework for writing quantum programs.
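Compilers in the software layer rewrite circuits to use fewer gates, since every gate adds noise. As a toy illustration, the pass below deletes back-to-back pairs of identical self-inverse gates, which multiply to the identity. The gate names and the `(name, qubits)` tuple format are assumptions for this sketch, and production compilers such as Qiskit's transpiler do far more.

```python
# Gates that are their own inverse: applying one twice is the identity.
SELF_INVERSE = {"H", "X", "Y", "Z", "CNOT"}

def cancel_adjacent_inverses(circuit):
    """Remove back-to-back pairs of identical self-inverse gates.

    `circuit` is a list of (gate_name, qubits) tuples, e.g. ("CNOT", (0, 1)).
    A stack lets cancellations cascade: H X X H on one qubit reduces to nothing.
    Only gates adjacent in the list are compared, so this pass is conservative.
    """
    optimized = []
    for gate in circuit:
        name, _ = gate
        if optimized and optimized[-1] == gate and name in SELF_INVERSE:
            optimized.pop()        # G followed by G is the identity
        else:
            optimized.append(gate)
    return optimized

circuit = [
    ("H", (0,)), ("X", (0,)), ("X", (0,)), ("H", (0,)),
    ("CNOT", (0, 1)), ("H", (1,)),
]
print(cancel_adjacent_inverses(circuit))  # [('CNOT', (0, 1)), ('H', (1,))]
```

Note that `("CNOT", (0, 1))` and `("CNOT", (1, 0))` compare unequal, so gates with swapped control and target are correctly left alone.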

The quantum landscape: players, progress, and potential applications

The race to build practical quantum computers is well underway, featuring a diverse ecosystem of players. Major technology companies are heavily invested: IBM Quantum is advancing with processors like Condor (1,121 qubits) and the planned multi-chip Kookaburra (1,386 qubits), along with its robust Qiskit software platform; Google Quantum AI is known for its Sycamore processor and ongoing research into quantum advantage; and Microsoft Azure Quantum offers cloud access to various hardware backends and its Q# language. Concurrently, specialized companies are making significant strides. IonQ and Quantinuum are pushing the boundaries with trapped ion technology, Rigetti Computing offers full-stack superconducting systems, PsiQuantum and Xanadu are pioneering photonic quantum computing, and Pasqal and QuEra are achieving impressive qubit counts with neutral atom platforms. This dynamic field is further enriched by numerous innovative startups, leading research universities (such as MIT, Stanford, and Waterloo), and significant government-backed National Quantum Initiatives worldwide, all contributing to the rapid pace of development.

We are currently in what's often described as the NISQ (Noisy Intermediate-Scale Quantum) era. This means today's quantum computers, typically ranging from 50 to over a thousand qubits, are large enough that simulating them exactly on classical hardware is very difficult, but they are still too "noisy" (prone to errors due to decoherence) and too small for full fault tolerance via quantum error correction. The focus in the NISQ era is on developing hybrid quantum-classical algorithms (like VQE, the Variational Quantum Eigensolver, and QAOA, the Quantum Approximate Optimization Algorithm), where quantum processors work in tandem with classical computers to tackle specific, niche problems for which a quantum advantage (performing a task more efficiently or accurately than any classical computer) might be demonstrated. This is a crucial period for learning, experimentation, and exploring the capabilities of current hardware.
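The hybrid loop behind VQE can be sketched in a few lines. In this toy version, every detail is an illustrative assumption: the "quantum" step prepares Ry(θ)|0⟩ and returns the expectation value of the Pauli Z operator, which stands in for a molecule's energy, and it is computed exactly rather than estimated from measurement shots. A classical optimizer then updates θ using the parameter-shift rule, which gives exact gradients for rotation gates from two extra circuit evaluations.

```python
import math

def energy(theta):
    """Expectation <Z> for the state Ry(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1>.

    <Z> = P(0) - P(1) = cos(theta/2)**2 - sin(theta/2)**2 = cos(theta).
    On hardware this value would be estimated from many measurement shots.
    """
    return math.cos(theta / 2) ** 2 - math.sin(theta / 2) ** 2

def parameter_shift_gradient(f, theta):
    """Exact derivative of f at theta for a single rotation-gate parameter."""
    return (f(theta + math.pi / 2) - f(theta - math.pi / 2)) / 2

def run_vqe(theta=0.1, learning_rate=0.4, steps=100):
    """Classical gradient descent on the circuit parameter theta."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(energy, theta)
    return theta, energy(theta)

theta_opt, energy_opt = run_vqe()
print(f"theta = {theta_opt:.4f}, energy = {energy_opt:.6f}")  # approaches pi and -1
```

The optimizer settles at θ ≈ π, where the state is |1⟩ and the toy energy reaches its minimum of -1. Real VQE runs use many parameters, Hamiltonians with many Pauli terms, and noisy shot-based estimates.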

Once fully mature, quantum computing promises to transform numerous sectors. In drug discovery and materials science, quantum machines could simulate molecular structures and chemical reactions with unprecedented accuracy, driving the design of novel drugs, more efficient industrial catalysts, higher-capacity batteries, and advanced materials. One example is the collaboration announced in 2021 between Roche and Cambridge Quantum Computing to apply quantum computing to early-stage drug discovery for Alzheimer's disease.

Optimization problems, common in logistics, finance, and scheduling, could see breakthroughs too; imagine optimizing global supply chains, designing more efficient airline routes, or managing complex financial portfolios with far greater insight. Goldman Sachs has researched quantum algorithms for derivatives pricing that could eventually provide more accurate risk assessments in volatile markets. Portfolio optimization, whose number of candidate solutions grows exponentially with the number of assets, is another promising target, although a practical quantum advantage there has yet to be demonstrated. JPMorgan Chase is exploring quantum computing for fraud detection, investigating whether quantum machine learning can identify subtle patterns in transaction data that classical algorithms might miss. Many experts believe quantum computing will become an important tool in financial modeling and risk analysis.
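To see why portfolio selection explodes, the sketch below writes a small selection problem as a QUBO (quadratic unconstrained binary optimization), a format that quantum approaches such as QAOA and quantum annealing commonly take as input, and then solves it by classical brute force. The asset data, the risk-aversion weight, and the function names are hypothetical, and this is not the method used by any of the firms mentioned above.

```python
from itertools import product

# Hypothetical data for four assets (not from any real market).
EXPECTED_RETURNS = [0.10, 0.07, 0.05, 0.08]
COVARIANCE = [
    [0.10, 0.02, 0.01, 0.03],
    [0.02, 0.06, 0.01, 0.02],
    [0.01, 0.01, 0.03, 0.01],
    [0.03, 0.02, 0.01, 0.08],
]
RISK_AVERSION = 0.5  # trade-off between risk and return (assumed value)

def qubo_cost(x):
    """QUBO objective for a 0/1 selection x: risk penalty minus expected return."""
    n = len(x)
    risk = sum(COVARIANCE[i][j] * x[i] * x[j] for i in range(n) for j in range(n))
    ret = sum(EXPECTED_RETURNS[i] * x[i] for i in range(n))
    return RISK_AVERSION * risk - ret

def brute_force_portfolio(n_assets):
    """Classically evaluate all 2**n_assets selections and keep the cheapest."""
    candidates = list(product((0, 1), repeat=n_assets))
    best = min(candidates, key=qubo_cost)
    return best, len(candidates)

best, checked = brute_force_portfolio(len(EXPECTED_RETURNS))
print("best selection:", best, "after checking", checked, "portfolios")
for n in (10, 30, 50):
    print(n, "assets ->", 2 ** n, "candidate selections")
```

Each added asset doubles the number of selections. Quantum optimization methods aim to find good selections without enumerating them all, though whether they can beat classical heuristics at scale remains an open question.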

Quantum machine learning (QML) is another exciting frontier, with the potential to enhance pattern recognition and data analysis for AI.

Cryptography and cybersecurity, perhaps the most discussed area, face both challenges and opportunities from quantum computing. On a large enough fault-tolerant machine, Shor's algorithm could break RSA encryption by efficiently factoring large numbers, and a variant of it also threatens elliptic-curve cryptography (ECC); together these underpin much of today's internet security. This has sparked a race to deploy post-quantum cryptography (PQC), new classical methods believed to resist quantum attacks, before fault-tolerant quantum computers arrive. The U.S. National Institute of Standards and Technology (NIST) published its first PQC standards in 2024, signaling the urgency of this transition. At the same time, quantum technology enables ultra-secure communication through quantum key distribution (QKD), where any eavesdropping attempt disturbs the quantum states and alerts the communicating parties.
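Shor's algorithm combines a quantum step with classical number theory: the quantum computer's job is to find the period r of f(x) = aˣ mod N, and the rest is ordinary arithmetic. The sketch below finds the period by brute force (the step a quantum computer would speed up) and then applies the classical post-processing to N = 15, the textbook example. The function names are hypothetical.

```python
from math import gcd

def find_period(a, n):
    """Smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1).

    This naive loop can take up to about n steps, i.e. exponential in the
    number of digits of n; it is the step Shor's algorithm performs with
    quantum period finding instead.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def shor_classical_part(a, n):
    """Turn the period of a**x mod n into factors of n, or None if a is unlucky."""
    shared = gcd(a, n)
    if shared != 1:
        return shared, n // shared      # a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None                     # odd period: pick another a
    y = pow(a, r // 2, n)
    if y == n - 1:
        return None                     # a**(r/2) = -1 mod n: pick another a
    return gcd(y - 1, n), gcd(y + 1, n)

print(shor_classical_part(7, 15))  # (3, 5): the period of 7**x mod 15 is 4
```

Here r = 4, so 7² mod 15 = 4, and gcd(3, 15) = 3 and gcd(5, 15) = 5 recover the factors. For a 2048-bit RSA modulus the period search is far beyond classical reach, which is exactly the part a fault-tolerant quantum computer would take over.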

The road ahead: challenges, timelines, and preparing for the quantum future

Despite the exciting progress, building large-scale, fault-tolerant quantum computers is a monumental undertaking with significant hurdles. Scalability and qubit quality remain big challenges: we need not just more qubits, but high-quality qubits that are stable, well-connected, and have low error rates. A typical quantum operation has an error rate of 0.1–1%, compared with error rates below 10⁻¹² in classical computers. Achieving robust fault tolerance through effective quantum error correction is arguably one of the biggest scientific and engineering challenges, as it typically requires hundreds or thousands of physical qubits to create a single, reliable "logical qubit."
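Two back-of-the-envelope calculations show why error correction matters. First, with independent errors of 0.1% per operation, a 1,000-gate circuit finishes without any error only about 37% of the time. Second, redundancy can suppress errors: the sketch models a simple repetition code, where one logical bit is stored in several physical copies and decoded by majority vote. This is a deliberately simplified, classical model that handles only bit flips; real quantum codes such as the surface code must also correct phase errors and extract error information without measuring the data qubits directly, which is part of why their overhead runs to hundreds or thousands of physical qubits.

```python
from math import comb

def circuit_success_probability(gate_error, num_gates):
    """Chance that no gate fails, assuming independent errors per gate."""
    return (1 - gate_error) ** num_gates

def repetition_code_logical_error(p, copies=3):
    """Failure rate of majority voting over `copies` independently flipped bits.

    The decoded bit is wrong only when more than half of the copies flip.
    """
    majority = copies // 2 + 1
    return sum(comb(copies, k) * p**k * (1 - p) ** (copies - k)
               for k in range(majority, copies + 1))

print(circuit_success_probability(0.001, 1000))  # about 0.37
for copies in (1, 3, 5, 7):
    print(copies, "copies ->", repetition_code_logical_error(0.01, copies))
```

With a 1% physical error rate, three copies already cut the logical error rate to about 0.03% (3p² - 2p³), and each additional pair of copies pushes it lower, provided p is small to begin with.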

The practical challenges extend beyond pure technology. Quantum computers require specialized facilities with sophisticated cooling systems, making them expensive to build and maintain. Also, the field needs continued algorithm development to discover new quantum algorithms that can solve practical problems, as well as more sophisticated software and tools to make programming and using quantum computers accessible to a broader range of scientists and developers. Finally, workforce development is crucial – training a new generation of quantum engineers, physicists, and computer scientists is essential for sustained progress.

A common question concerns when quantum computing will become mainstream. It is widely acknowledged that quantum computing is a long-term endeavor, and it's important to manage expectations accordingly. We are already seeing near-term benefits, with cloud platforms like IBM Quantum, Amazon Braket, and Microsoft Azure Quantum providing researchers access to NISQ devices for experimentation and exploration of specific problems. However, the widespread impact on everyday life and broad commercial applications, particularly those requiring large, fault-tolerant machines, is likely still some years, if not decades, away.

Even with the longer timelines for some applications, the importance of quantum literacy cannot be overstated. Businesses, researchers, and individuals alike can benefit from starting to learn about quantum technology now to understand its potential impact and prepare for the changes it may bring. A critical immediate concern for organizations is data security. The development of post-quantum cryptography (PQC) is well underway, with bodies like NIST leading standardization efforts. Proactively planning for a transition to PQC will be vital to protect sensitive data from the future threat of quantum code-breaking.

Conclusion: embracing the quantum revolution

Quantum computing is more than just an incremental improvement; it's a fundamental shift in how we process information, leveraging the unique phenomena of the quantum world – superposition, entanglement, and interference – to tackle certain problems that are intractable for even the most powerful classical machines. It represents a new frontier in science and technology, with the potential to unlock unprecedented computational power.

While significant challenges in hardware, software, and algorithm development remain, the pace of innovation is remarkable, driven by a global community of researchers, tech giants, and ambitious startups. The transformative potential of quantum computing to revolutionize fields like medicine, materials science, finance, AI, and fundamental scientific research is widely considered immense.

Want to dive deeper into the world of quantum computing?

- Check out resources from IBM Quantum.

- Explore the Qiskit Textbook for hands-on learning.

- Stay updated on Post-Quantum Cryptography efforts at the NIST PQC page.